AI detection tools are designed to identify writing generated by artificial intelligence. They analyze patterns, word choice, and structural features to estimate whether content is AI-produced. Some platforms, like Turnitin, have integrated AI detection features. However, at the University of Nebraska at Kearney, we do not recommend using AI detectors as definitive proof of academic dishonesty. These tools often lack reliability and can lead to misidentifications.

Understanding how these tools work and recognizing their limitations is essential for informed decision-making. Below, we explore how AI detectors function, their shortcomings, and alternative strategies for addressing concerns about AI-generated student work.

AI detection tools are themselves powered by artificial intelligence. They evaluate written work based on specific indicators, such as repetitive phrasing, sentence structure, and vocabulary patterns. These tools typically generate a percentage score, such as “73% human-generated,” indicating the likelihood of the content being written by a person.

However, the lack of clear standards for what constitutes an "acceptable" percentage raises significant challenges. Additionally, detection tools can produce:

AI detectors are far from foolproof. Studies have shown that many tools have accuracy rates below 70%, with some as low as 38%. Even the highest-rated tool, with 90% accuracy, misidentifies 1 in 10 submissions. These errors can have significant consequences for both students and instructors.
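To make these accuracy figures concrete, here is a minimal sketch of the arithmetic. It assumes that "accuracy" means the share of submissions a tool labels correctly and that errors are spread evenly across submissions. The function name `expected_misidentified` and the class size of 30 are hypothetical, chosen only for illustration.

```python
def expected_misidentified(num_submissions: int, accuracy: float) -> float:
    """Expected number of submissions a detector labels incorrectly.

    Assumes accuracy is the fraction of submissions classified correctly.
    """
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return num_submissions * (1.0 - accuracy)


# Accuracy figures quoted in the text; the class size of 30 is hypothetical.
CLASS_SIZE = 30
for accuracy in (0.90, 0.70, 0.38):
    wrong = expected_misidentified(CLASS_SIZE, accuracy)
    print(f"{accuracy:.0%} accurate: roughly {wrong:.0f} of {CLASS_SIZE} submissions misidentified")
```

Under these assumptions, a class of 30 would expect about three mislabeled submissions on every assignment, even with the 90% tool. A mislabel can go either way: a student's own work flagged as AI-generated, or AI-generated work passed as human.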

Students can easily trick AI detectors by:

These strategies undermine the reliability of detection tools and highlight their limitations as a method for ensuring academic integrity.

Example Assignment

In my online Introduction to Psychology course, students complete weekly reflection assignments based on the topic of the week. The reflection consists of two questions:

This assignment is designed to help students engage with the material, assess their understanding, and provide an opportunity for them to seek clarification. The topic for this specific week was cognitive development theories.

Here is one student’s submission:

"The intricate processes underlying cognitive development are both fascinating and profound. Exploring Piaget's stages of cognitive development has illuminated how individuals acquire knowledge and adapt to their environments. It is astonishing to observe how seemingly simple mechanisms, such as assimilation and accommodation, fuel the evolution of human cognition. However, the interplay of cultural and societal influences on cognitive growth leaves much to be unpacked, as these layers reveal the nuances of human development and behavior."

When I read this submission, I immediately suspected it was generated by AI because:

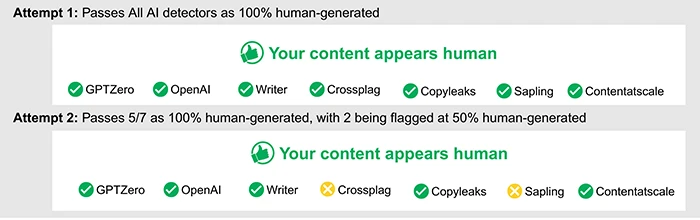

To confirm my suspicions, I used an AI detection tool on two separate occasions with this exact text. The results were inconsistent:

Key Takeaways

This example highlights the unreliability of AI detection tools and the challenges they present in identifying AI-generated content. Instead of relying solely on detectors, I compared this response to the student’s previous submissions and noticed a significant difference in tone and complexity. I then discussed the assignment with the student to better understand their learning process and clarify expectations for future reflections.

Given the limitations of AI detection tools, here are alternative approaches to address concerns about AI usage in student work:

While current detection tools are unreliable, advancements in technology may improve their accuracy in the future. Until then, fostering open communication with students, revising assessments, and emphasizing academic integrity remain the most effective strategies.

Special thanks to Cassie Mallette, Program Manager for the AI Learning Lab and Senior Instructional Designer at the University of Nebraska at Omaha, for taking the time to meet with our team and openly share resources and the UNO team's lived experience with us. Your contributions to this project and partnership are greatly appreciated!